Solo Mining Virtual Currencies

Saturday 4th January, 2014 12:31

If you're serious about solo mining, you're probably not reading this blog entry, and you've probably already bought some ASIC miners for the SHA256-based currencies (e.g. Bitcoin) or an AMD graphics card for the scrypt-based ones (e.g. Litecoin). If you're like me, you bought a new NVIDIA graphics card because the drivers have traditionally been a bit better on Windows and you want to play games, but you also like the idea of doing some mining on the side. I've needed a new graphics card for a while (my old GTX 275 was starting to look dated and struggled with newer games). I'd been looking at the GTX 660 Ti, but I procrastinated for so long that I eventually decided to go for the GTX 780.

Mining involves doing lots of complex calculations, and every once in a while you'll manage to mine a block of coins. Depending on the difficulty and your hardware, mining by yourself might mean going months (or even years?) without earning anything. To avoid this, a lot of "casual miners" appear to use pools, which let a group of people work together and share the rewards. I'm incredibly greedy and don't like the idea of sharing (or, more precisely, I don't trust the mining pools to pay out, and it seems my paranoia may have been justified).

Either way, you'll want to use the x86 version of cudaminer with your NVIDIA graphics card. I was quite happy running older versions: the December 10th version seemed pretty good, relying on autotune to detect some decent settings. Occasionally it ran slower than I expected, but I was happy. Then along came the December 18th version of cudaminer with better support for Kepler-based cards (and, more recently, a warning that Fermi-based devices "seem to run quite hot with this release"). I tried it. Autotune didn't seem to work, so I set some manual settings (based on what I'd read online) and was indeed getting about 45% faster hashrates. For about 4 hours. Then my card died (it may have died before then, but I didn't notice immediately) and I had to go back to my 275 for a week. I'm fairly sure it was a faulty card, as it never got that hot, and NVIDIA limits power usage and voltage to stop the card from killing itself. Some people suspect NVIDIA's restrictions are really there for commercial reasons, and they may have a point:

> The only other major difference between the Titan and the GTX 780 comes down to raw Compute performance. In order to preserve the top-end offering's charms as a super-computer surrogate, double-precision floating performance (FP64) has been ruthlessly nerfed, giving Titan a huge advantage here. However, for our main purposes - gameplay - there are no savage cutbacks at all, and bearing in mind the cost saving, it's really difficult to recommend Titan at all now.

Anyway, this morning I received a replacement card (one that doesn't produce an electrical burning smell or crackling noises) and fired up my Feathercoin wallet. I ran cudaminer tentatively, as I really don't want to kill another graphics card (it's inconvenient to replace, and although I'm 99% sure it was a faulty card, I can't help worrying that it might have been my fault). To play it a little safer, this time I added a new line to my batch file:

```
setx GPU_MAX_ALLOC_PERCENT 100
```

I ran the December 10th version with the manual settings I'd come up with for the December 18th version, and got some unusually high numbers back (around 1100 khash/s):

```
[2014-01-04 10:57:12] GPU #0: GeForce GTX 780, 12288 hashes, 31.51 khash/s
[2014-01-04 10:57:13] GPU #0: GeForce GTX 780, 159744 hashes, 682.67 khash/s
[2014-01-04 10:57:16] GPU #0: GeForce GTX 780, 3416064 hashes, 1112 khash/s
[2014-01-04 10:57:21] GPU #0: GeForce GTX 780, 5566464 hashes, 1101 khash/s
[2014-01-04 10:57:26] GPU #0: GeForce GTX 780, 5517312 hashes, 1088 khash/s
[2014-01-04 10:57:31] GPU #0: GeForce GTX 780, 5443584 hashes, 1097 khash/s
[2014-01-04 10:57:36] GPU #0: GeForce GTX 780, 5492736 hashes, 1129 khash/s
```

This is twice as fast as the numbers I saw with the December 18th version. I briefly tried the newer version just to be sure:

```
[2014-01-04 10:57:58] GPU #0: GeForce GTX 780, 12288 hashes, 41.46 khash/s
[2014-01-04 10:57:58] GPU #0: GeForce GTX 780, 208896 hashes, 515.03 khash/s
[2014-01-04 10:58:03] GPU #0: GeForce GTX 780, 2580480 hashes, 536.73 khash/s
[2014-01-04 10:58:08] GPU #0: GeForce GTX 780, 2691072 hashes, 530.36 khash/s
```

I stopped cudaminer pretty quickly to be on the safe side, but I was indeed seeing about half the hashrate (around 530 khash/s) with exactly the same settings. I thought I'd seen a 45% improvement with the newer version last weekend (admittedly without the GPU_MAX_ALLOC_PERCENT setting), but now I'm seeing the opposite.
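To put a number on "about half", here's a small sketch that averages the khash/s figures from the two sets of log lines above. It skips the first two readings of each run as warm-up; that cut-off is my own assumption based on the ramp-up visible in the logs. `steady_state_khash` is just a hypothetical helper name, not part of cudaminer.

```python
import re
from statistics import mean

# Matches the tail of a cudaminer status line, e.g.
# "... GeForce GTX 780, 5566464 hashes, 1101 khash/s"
LINE_RE = re.compile(r"(\d+) hashes, ([\d.]+) khash/s")

def steady_state_khash(lines, warmup=2):
    """Average the khash/s readings, ignoring non-matching lines and the
    first `warmup` readings (assumed to be the miner ramping up)."""
    rates = [float(m.group(2)) for m in map(LINE_RE.search, lines) if m]
    return mean(rates[warmup:])

# December 10th version (log lines copied from above).
old_run = [
    "[2014-01-04 10:57:12] GPU #0: GeForce GTX 780, 12288 hashes, 31.51 khash/s",
    "[2014-01-04 10:57:13] GPU #0: GeForce GTX 780, 159744 hashes, 682.67 khash/s",
    "[2014-01-04 10:57:16] GPU #0: GeForce GTX 780, 3416064 hashes, 1112 khash/s",
    "[2014-01-04 10:57:21] GPU #0: GeForce GTX 780, 5566464 hashes, 1101 khash/s",
    "[2014-01-04 10:57:26] GPU #0: GeForce GTX 780, 5517312 hashes, 1088 khash/s",
    "[2014-01-04 10:57:31] GPU #0: GeForce GTX 780, 5443584 hashes, 1097 khash/s",
    "[2014-01-04 10:57:36] GPU #0: GeForce GTX 780, 5492736 hashes, 1129 khash/s",
]

# December 18th version (log lines copied from above).
new_run = [
    "[2014-01-04 10:57:58] GPU #0: GeForce GTX 780, 12288 hashes, 41.46 khash/s",
    "[2014-01-04 10:57:58] GPU #0: GeForce GTX 780, 208896 hashes, 515.03 khash/s",
    "[2014-01-04 10:58:03] GPU #0: GeForce GTX 780, 2580480 hashes, 536.73 khash/s",
    "[2014-01-04 10:58:08] GPU #0: GeForce GTX 780, 2691072 hashes, 530.36 khash/s",
]

old_avg = steady_state_khash(old_run)
new_avg = steady_state_khash(new_run)
print(f"Dec 10th: {old_avg:.1f} khash/s, Dec 18th: {new_avg:.1f} khash/s, "
      f"ratio {old_avg / new_avg:.2f}")
# prints: Dec 10th: 1105.4 khash/s, Dec 18th: 533.5 khash/s, ratio 2.07
```

The newer version only has two post-warm-up readings here, so the ratio is a rough indication rather than a proper benchmark.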

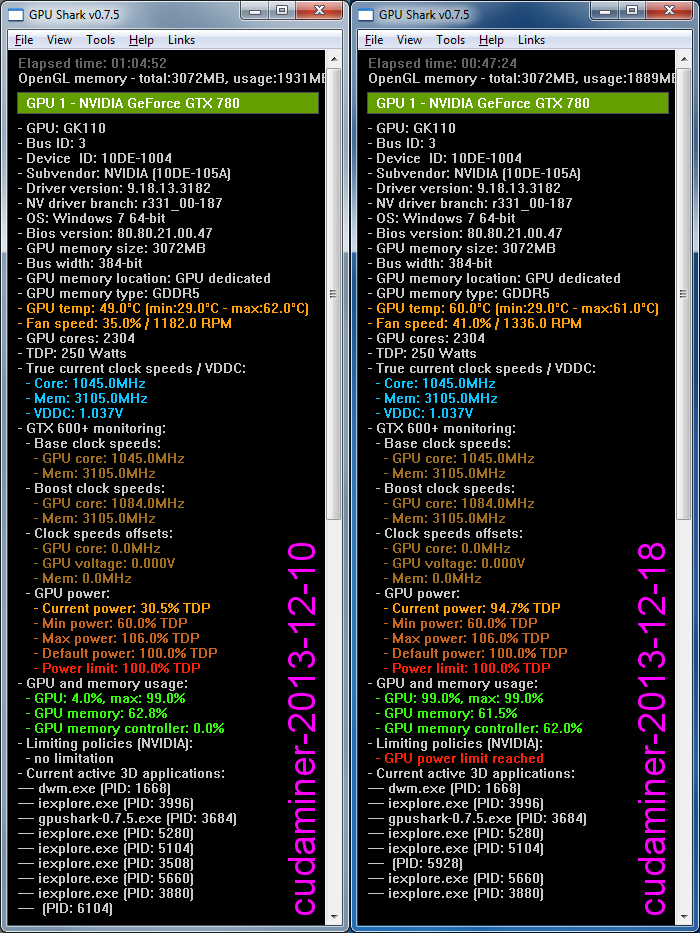

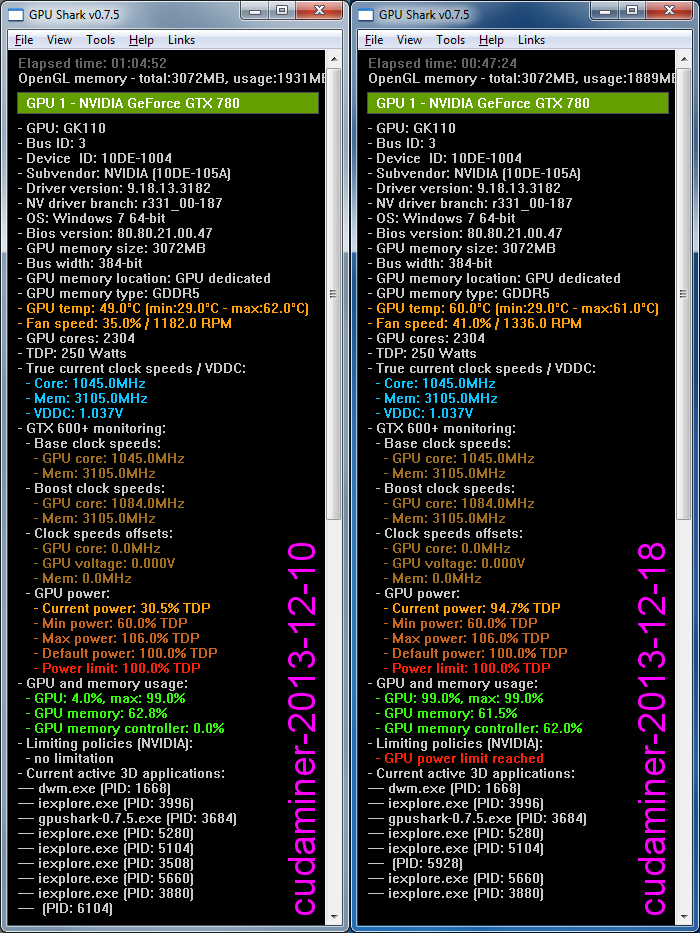

I then looked at the values in GPU Shark. It turns out the old version is not only faster today, but uses significantly less of pretty much everything!

It's using about a third of the power, doesn't use the GPU memory controller, barely uses the GPU, and because it's using fewer resources it's running a lot cooler.

At some point I'll check whether GPU_MAX_ALLOC_PERCENT makes a difference, but I'm quite happy with the rates I'm seeing with the old version, and very happy with how cool the card is and how little power it's using. Whether it's working properly is another matter; I guess I'll find out the next time I solo mine a block. I'm genuinely concerned that I've done something wrong, as it looks too good to be true, but I have heard of people using settings that should be slower and getting better performance, apparently because certain operations work much better when things are in sync. At the current difficulty and hashrate, it might only take me 6 days to find out!
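For anyone wondering where an estimate like "6 days" comes from, here's a rough sketch. It assumes Feathercoin reports difficulty on the same scale as Bitcoin, where a difficulty-1 block needs about 2^32 hashes on average. The post doesn't give the actual network difficulty, so the value of 130 below is purely illustrative, and the function names are hypothetical. Block discovery is modelled as a Poisson process, which is also why "expected 6 days" is far from a guarantee.

```python
import math

# The difficulty-1 target is 0xFFFF * 2**208, so one hash meets it with
# probability 0xFFFF / 2**256, i.e. it takes 2**48 / 0xFFFF (~2**32) hashes
# on average. Assumption: the coin uses this Bitcoin-style difficulty scale.
HASHES_PER_DIFF1 = 2**48 / 0xFFFF

def expected_seconds_to_block(difficulty, hashrate):
    """Mean time in seconds to solo mine a block at `hashrate` hashes/second."""
    return difficulty * HASHES_PER_DIFF1 / hashrate

def chance_within(seconds, difficulty, hashrate):
    """Probability of finding at least one block within `seconds`,
    treating blocks as arriving according to a Poisson process."""
    mean_time = expected_seconds_to_block(difficulty, hashrate)
    return 1.0 - math.exp(-seconds / mean_time)

# Hypothetical difficulty; hashrate taken from the ~1100 khash/s logs.
difficulty = 130
hashrate = 1100e3
days = expected_seconds_to_block(difficulty, hashrate) / 86400
print(f"Expected time to a block: {days:.1f} days")
print(f"Chance of a block within 6 days: "
      f"{chance_within(6 * 86400, difficulty, hashrate):.0%}")
```

Even when the expected time is exactly 6 days, the model gives only about a 63% (1 - 1/e) chance of finding a block within those 6 days. That's the variance pools exist to smooth out.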

I just hope Feathercoins go up in value at some point. I'm not convinced they will, but who knows.

EDIT: I've just noticed that my CPU usage is pretty high, around 85%, thanks to cudaminer. I don't remember it being that high before, but I suppose that if it's hashing at more than double the previous rate, it could use twice as much CPU power, since I'm offloading the small amount of SHA256 hashing to my multicore CPU (-H 1, i.e. --hash-parallel 1, in the command below).

For reference, this is the command I'm running (TCP port and credentials have been obfuscated):

```
"C:\Program Files (x86)\Feathercoin\cudaminer-2013-12-10\x86\cudaminer.exe" --url=http://127.0.0.1:xxxxx --userpass=xxxxx:xxxxx -m 1 -H 1 -i 0 -l T12x32
```
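If you'd rather not rely on a batch file, here's a minimal sketch of a Python launcher that reproduces the command above. The port and credentials are placeholders, because the post obfuscates them. `build_args`, `build_env` and `launch` are hypothetical helper names. One difference from the batch file is worth knowing: `setx` saves the variable for command windows opened later, not for the window it runs in. Passing `env=` applies it only to the cudaminer process being launched.

```python
import os
import subprocess

# Executable path from the post.
CUDAMINER = r"C:\Program Files (x86)\Feathercoin\cudaminer-2013-12-10\x86\cudaminer.exe"

def build_args(port, user, password, launch_config="T12x32"):
    """Argument list matching the command above; the flags are copied
    verbatim from the post, only port/credentials are parameters."""
    return [
        CUDAMINER,
        f"--url=http://127.0.0.1:{port}",
        f"--userpass={user}:{password}",
        "-m", "1",
        "-H", "1",
        "-i", "0",
        "-l", launch_config,
    ]

def build_env():
    """Copy of the current environment with GPU_MAX_ALLOC_PERCENT set,
    affecting only the child process (unlike a persistent setx)."""
    return dict(os.environ, GPU_MAX_ALLOC_PERCENT="100")

def launch(port, user, password):
    """Start cudaminer and wait for it to exit; raises if it fails."""
    return subprocess.run(build_args(port, user, password), env=build_env(), check=True)
```

Usage would be something like `launch(port, user, password)` with your wallet's RPC port and credentials filled in.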

EDIT2: It appears that the T kernel is best for my GTX 780 (compute 3.5), rather than the K (Kepler) kernel (compute 3.0). Maybe the December 18th version, which uses CUDA 5.5 with the new kernel, isn't as efficient as the CUDA 5.0 code? Perhaps it does more on the GPU than the CPU?

EDIT3: Further reading suggests that the best launch configuration (-l) for cudaminer with a compute 3.5 device (Titan or 780) is [number of SMX units]x[warp size], i.e. 14x32 for the Titan and 12x32 for my 780.
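The rule of thumb from EDIT2 and EDIT3 can be written down as a tiny helper. This is only a sketch of that rule, assuming the T kernel for compute 3.5 cards as described above. It covers just the two cards mentioned in this post, and it deliberately refuses other compute capabilities, since the rule was only reported for compute 3.5. `suggest_launch_config` is a hypothetical name.

```python
WARP_SIZE = 32  # threads per warp on NVIDIA GPUs

# SMX unit counts for the two compute 3.5 cards mentioned in the post.
SMX_UNITS = {"GTX 780": 12, "GTX Titan": 14}

def suggest_launch_config(card, compute=(3, 5)):
    """Return a cudaminer -l value following the rule of thumb
    T<number of SMX units>x<warp size>; only compute 3.5 is covered."""
    if compute != (3, 5):
        raise ValueError("rule of thumb only reported for compute 3.5 (T kernel)")
    return f"T{SMX_UNITS[card]}x{WARP_SIZE}"

print(suggest_launch_config("GTX 780"))    # T12x32
print(suggest_launch_config("GTX Titan"))  # T14x32
```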

EDIT4: It seems that a high hashrate combined with low GPU usage is a sign that something is wrong. I ended up running the latest cudaminer with the settings "-i 0 -m 1 -D -H 1 -l T12x32", and this seemed to run pretty well (over 500 khash/s) without triggering the voltage limit (the power limit would still kick in). It does seem to put a lot of stress on the card, but in theory it should be designed to handle that. The fans haven't gone very high, and the GPU temperature isn't too bad.
